Docker

What is Virtualization?

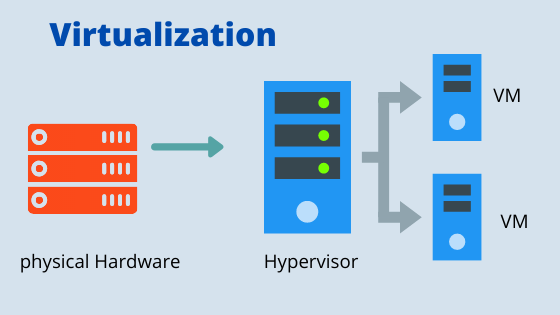

Virtualization allows us to share a single physical resource among multiple customers and organizations. In simple words, it means running multiple operating systems on one computer system simultaneously. Virtualization provides several benefits, including improved resource utilization, scalability, flexibility, cost savings, easier management, enhanced security, and disaster recovery capabilities.

Why do we need virtualization?

Let's say a company has a physical server with powerful hardware specifications, including multiple CPUs, a large amount of RAM, and abundant storage capacity. However, they have installed only a single application on this server, which does not fully utilize the available resources. Most of the hardware sits idle, so the system is being used inefficiently.

Virtualization was introduced to use system resources more efficiently.

Virtual machine - It is a separate, isolated environment on your computer that runs its own operating system and software applications. With the help of virtual machines, we can use the available resources efficiently.

To create a virtual machine, you need virtualization software, also called a hypervisor.

Hypervisor - It is software that creates and runs virtual machines (VMs). A hypervisor allows one host computer to support multiple guest VMs by virtually sharing its resources, such as memory and processing.

VirtualBox is one such hypervisor. You can download it from the official VirtualBox website.

The same concept is followed by AWS, Azure, and other cloud platforms. AWS, for example, builds data centers in specific regions and installs its own physical servers there (millions of servers). When we request one virtual machine (an EC2 instance) from AWS, the request is sent to one of these data centers, and a virtual machine is allocated to us on one of those physical servers.

What is a container?

A container is a lightweight software package that contains everything needed to run a software application. It includes the application's code, dependencies, libraries, and settings.

Containers allow applications to run consistently across different systems, providing isolation, portability, and efficiency.

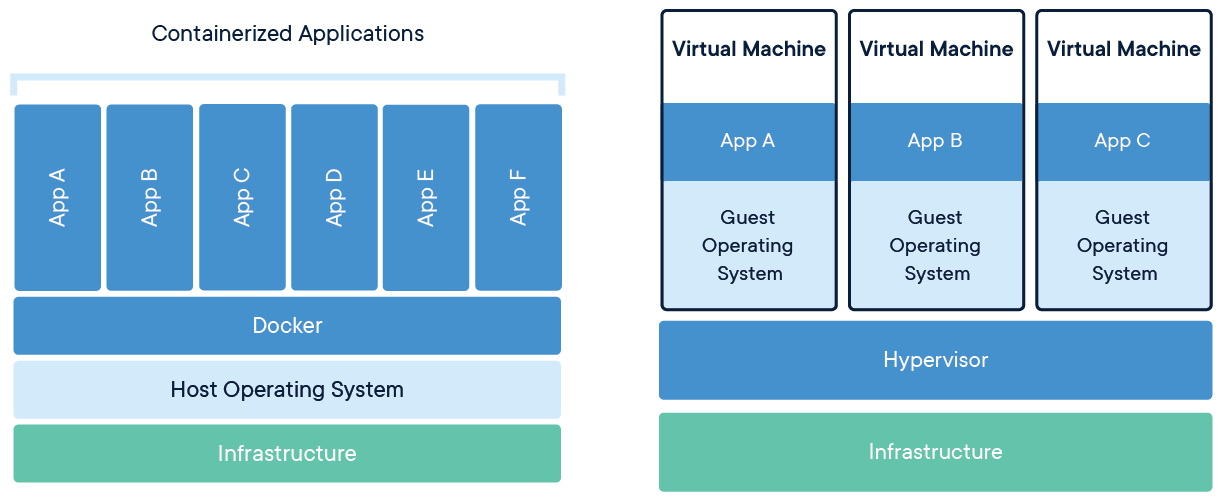

Containers are lightweight because they share the kernel of the host operating system, which means they do not require a separate operating system installation.

Why do we need a container when we have a virtual machine?

Virtual machines solve the problem of underused physical servers. But suppose an organization runs 1000 virtual machines and each of them wastes some of its resources. Added up, that is a major loss for the organization.

Containers were introduced to solve this problem. They make effective use of the virtual machine's resources, so you can say that the problem of the physical server is solved by virtual machines, and the problem of the virtual machine is solved by containers.

However, virtual machines are more secure than containers. Each virtual machine has a full operating system, which gives it complete isolation. A container, on the other hand, does not have a complete operating system. It is logically isolated, but the isolation is not complete.

To create a container you need a containerization platform like Docker, just as you need a virtualization platform or hypervisor to create a VM.

There are two deployment models: you can create containers on top of virtual machines, or on top of a physical server. The most widely used model is running containers on top of virtual machines provided by cloud providers.

Container vs virtual machine

Both are technologies used to isolate applications and their dependencies, but they have some key differences.

Resource Utilization: Containers share the host operating system kernel, making them lighter and faster than VMs. VMs run a full-fledged OS on top of a hypervisor. They provide a higher level of separation and flexibility than containers but come with the overhead of running full operating systems.

Portability: Containers are designed to be portable and can run on any system with a compatible host operating system. VMs are less portable as they need a compatible hypervisor to run.

Security: VMs provide a higher level of security as each VM has its own operating system and can be fully isolated from the host and other VMs. Containers provide less isolation, as they share the host operating system.

Management: Managing containers is typically easier than managing VMs, as containers are designed to be lightweight and fast-moving. With VMs, you have to maintain the entire operating system.

Why are containers lightweight?

Containers are lightweight because they share the kernel and resources of the host operating system instead of virtualizing an entire operating system like virtual machines do.

A virtual machine has a full operating system, which is why it is heavier. Containers are lightweight, so you can create multiple containers on top of one virtual machine, and a container that is not running does not consume resources from the kernel of the host operating system.
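One way to see the shared kernel in practice is to compare the kernel release reported on the host with the one reported inside a container. The sketch below assumes a Linux host with Docker installed and uses the `ubuntu` image purely as an example. It skips the container step when Docker is not reachable.

```shell
# Kernel release as seen by the host.
host_kernel=$(uname -r)
echo "host kernel:      $host_kernel"

# Kernel release as seen from inside a container (only if Docker is reachable).
if command -v docker >/dev/null 2>&1 && docker info >/dev/null 2>&1; then
    if container_kernel=$(docker run --rm ubuntu uname -r 2>/dev/null); then
        echo "container kernel: $container_kernel"
    else
        echo "Could not start the example container."
    fi
else
    echo "Docker is not reachable; skipping the container check."
fi
```

On a native Linux host the two values should be identical, because the container has no kernel of its own. On Docker Desktop for macOS or Windows, the container reports the kernel of the Linux VM that Docker runs in.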

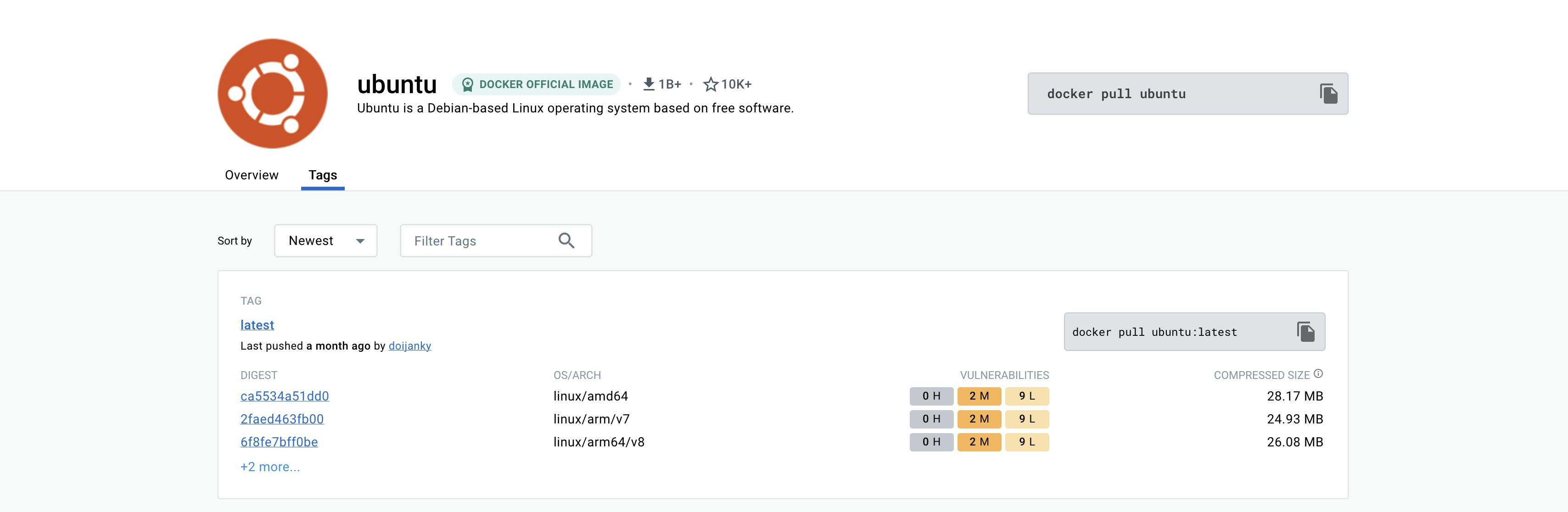

For example - the Ubuntu container image is just 28 MB, whereas the official Ubuntu image used to install a virtual machine is 2.3 GB, which is close to 100 times larger.

So instead of running a single application per virtual machine, you can run 10-15 containers on the same virtual machine, depending on how many resources the containers use from the host operating system. Containers that are not in use do not consume any resources from the kernel. Virtual machines, by contrast, each have their own operating system: the kernel used by one VM is not used by any other VM, so they are completely isolated.

Why should containers be isolated?

When you install Docker on top of your operating system, Docker creates containers, and those containers use system resources through the host kernel. Each container has a base OS image, that is, a minimal set of system dependencies of its own, because multiple containers running side by side must be isolated from each other.

This logical isolation is essential. Without it, anyone (for example, an attacker) who gains access to one container in a Kubernetes cluster could easily get access to all the other containers in that cluster.

That would be a huge security concern, which is why each container should be isolated. Each container has its own system dependencies, and everything else is shared through the host kernel.

Files and Folders in Container Base Images

/bin: contains binary executable files, such as the ls, cp, and ps commands.

/sbin: contains system binary executable files, such as the init and shutdown commands.

/etc: contains configuration files for various system services.

/lib: contains library files that are used by the binary executables.

/usr: contains user-related files and utilities, such as applications, libraries, and documentation.

/var: contains variable data, such as log files, spool files, and temporary files.

/root: is the home directory of the root user.

Containers cannot share these files and folders with one another. If they did, the containers would not be secure. That is why these files are not taken from the host. They are part of the container's base image.

Files and Folders that containers use from the host operating system

The host's file system: Docker containers can access the host file system using bind mounts, which allow the container to read and write files in the host file system.

Networking stack: The host's networking stack is used to provide network connectivity to the container. Docker containers can be connected to the host's network directly or through a virtual network.

System calls: The host's kernel handles system calls from the container, which is how the container accesses the host's resources, such as CPU, memory, and I/O.

Namespaces: Docker containers use Linux namespaces to create isolated environments for the container's processes. Namespaces provide isolation for resources such as the file system, process ID, and network.

Control groups (cgroups): Docker containers use cgroups to limit and control the amount of resources, such as CPU, memory, and I/O, that a container can access.
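The namespace, cgroup, and bind-mount mechanisms listed above can be observed directly. The following sketch assumes a Linux host. The `ubuntu` image, the 256 MB and half-CPU limits, and the `/data` mount point are arbitrary choices for illustration.

```shell
# Every Linux process belongs to a set of namespaces, listed under /proc/<pid>/ns.
pid_ns=$(readlink /proc/self/ns/pid 2>/dev/null || echo "unavailable")
net_ns=$(readlink /proc/self/ns/net 2>/dev/null || echo "unavailable")
echo "host PID namespace: $pid_ns"
echo "host net namespace: $net_ns"

if command -v docker >/dev/null 2>&1 && docker info >/dev/null 2>&1; then
    # Namespaces: a container gets its own PID namespace by default,
    # so the ID printed here differs from the host's.
    docker run --rm ubuntu readlink /proc/self/ns/pid \
        || echo "Could not start the example container."

    # cgroups: cap the container at 256 MB of memory and half a CPU core.
    # Bind mount: expose the current host directory inside the container at /data.
    docker run --rm --memory 256m --cpus 0.5 -v "$PWD":/data ubuntu ls /data \
        || echo "Could not start the example container."
else
    echo "Docker is not reachable; skipping the container examples."
fi
```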

Docker

Docker is a containerization platform that provides an easy way to containerize your applications. Using Docker, you can build container images, run the images to create containers, and push these images to container registries such as Docker Hub.

In simple words, containerization is a concept or technology, and Docker implements containerization.

Docker Architecture

Docker is a platform for containerizing applications. In this section we will see the complete process of how Docker images are built and pushed to a registry such as Docker Hub.

What is Docker daemon?

The Docker daemon runs on the host operating system. It is responsible for running containers and managing Docker services, and it can communicate with other daemons. It manages Docker objects such as images, containers, networks, and storage volumes.

Whenever you run a Docker CLI command, the command is received by the Docker daemon, which executes it to create images and containers. You can also push these images to Docker Hub with the help of the Docker daemon.
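The split between the CLI and the daemon is visible in `docker version`, which reports a client section and a server (daemon) section. A minimal sketch, assuming Docker is installed. The variable name `daemon_state` is only for illustration.

```shell
if ! command -v docker >/dev/null 2>&1; then
    daemon_state="client not installed"
elif docker info >/dev/null 2>&1; then
    # docker info succeeds only when the CLI can talk to the daemon.
    daemon_state="reachable"
    docker version --format 'client {{.Client.Version}}, server {{.Server.Version}}'
else
    daemon_state="unreachable"
fi
echo "Docker daemon: $daemon_state"
```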

What is Docker Client?

The Docker client uses commands and the Docker API to communicate with the Docker daemon (server). When you run a docker command in the terminal, the client sends it to the Docker daemon.

The Docker client uses a command-line interface (CLI) to run commands such as the following:

docker build - Tells the Docker daemon to build a Docker image.

docker pull - Pulls an image from the registry.

docker run - Creates and starts a container from an image.
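Put together, a typical session with these three commands might look like the sketch below. The image name `myapp:1.0` is a hypothetical placeholder, and the build step runs only if a Dockerfile exists in the current directory.

```shell
image="myapp:1.0"   # hypothetical image name

if command -v docker >/dev/null 2>&1 && docker info >/dev/null 2>&1; then
    # docker pull: download an image from a registry (Docker Hub by default).
    docker pull hello-world || echo "pull failed"

    # docker run: create and start a container from an image.
    docker run --rm hello-world || echo "run failed"

    # docker build: build an image from the Dockerfile in the current directory.
    if [ -f Dockerfile ]; then
        docker build -t "$image" . || echo "build failed"
    fi
else
    echo "Docker is not reachable; skipping."
fi
```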

What is Docker Host?

The Docker host is the machine or server where the Docker engine is running. It manages and executes Docker containers, provides resources for running them, and handles networking aspects. The Docker client communicates with the Docker host to issue commands and manage containers, images, and other Docker resources.

Docker Registry

Docker Registry manages and stores the Docker images.

There are two types of registries in Docker:

Public Registry - Docker Hub is the best-known public registry.

Private Registry - It is used to share images within an enterprise.

You can say that the Docker daemon is the heart of Docker. If the daemon goes down, Docker stops working, and by default the running containers stop as well.

What is docker cp? - docker cp is a command that copies files or directories between a Docker container and the local file system. It is used to transfer files into or out of a container.

docker cp [OPTIONS] CONTAINER:SRC_PATH DEST_PATH

docker cp [OPTIONS] SRC_PATH CONTAINER:DEST_PATH

CONTAINER: Specifies the container from/to which files are being copied.

SRC_PATH: Specifies the source file or directory inside or outside the container.

DEST_PATH: Specifies the destination path inside or outside the container.
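As an illustration, the sketch below copies a file into a container and back out again. The container name `cp-demo`, the `ubuntu` image, and the file names are all hypothetical.

```shell
workdir=$(mktemp -d)
echo "hello from the host" > "$workdir/note.txt"

if command -v docker >/dev/null 2>&1 && docker info >/dev/null 2>&1; then
    # Start a long-running container to copy files to and from.
    docker run -d --name cp-demo ubuntu sleep 300

    # Host -> container: SRC_PATH CONTAINER:DEST_PATH
    docker cp "$workdir/note.txt" cp-demo:/tmp/note.txt

    # Container -> host: CONTAINER:SRC_PATH DEST_PATH
    docker cp cp-demo:/tmp/note.txt "$workdir/note-copy.txt"

    # Remove the demo container.
    docker rm -f cp-demo
else
    echo "Docker is not reachable; skipping the docker cp calls."
fi
```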

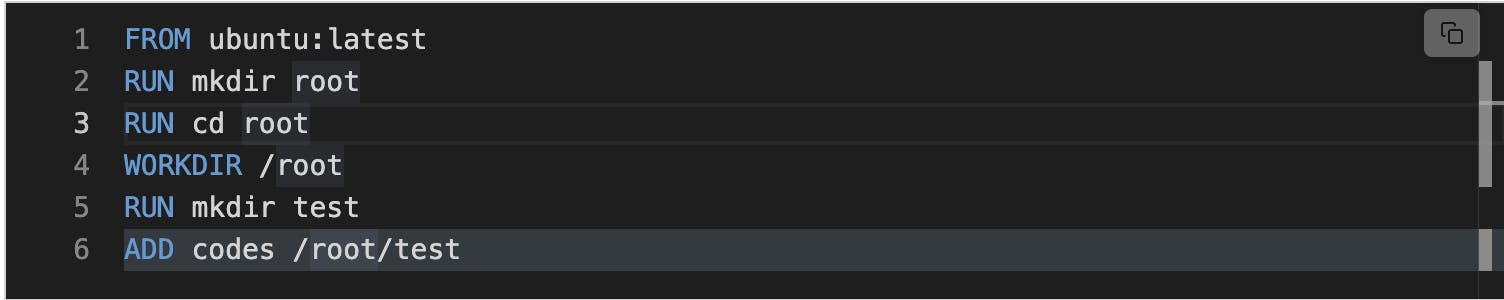

What is ADD? - ADD is a Dockerfile instruction used to copy files and directories into a Docker image. It can copy data in three ways:

Copy files from the local storage to a destination in the Docker image.

Copy a tarball from the local storage and extract it automatically inside a destination in the Docker image.

Copy files from a URL to a destination inside the Docker image.

Difference between ADD and COPY?

If you only need to copy files or directories from the local file system into a Docker image during the build, use the COPY instruction, which is the recommended choice. Use ADD only when you specifically need its additional features, such as archive extraction or URL support. To copy files between a container and the host, use the docker cp command instead.
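The difference is easiest to see side by side. The sketch below writes a small example Dockerfile to a temporary directory and prints it. The file names `app.py` and `vendor.tar.gz` and the `example.com` URL are hypothetical placeholders, so the Dockerfile is for reading rather than building.

```shell
demo_dir=$(mktemp -d)

cat > "$demo_dir/Dockerfile" <<'EOF'
FROM ubuntu:latest
WORKDIR /app

# COPY: plain copy from the build context into the image.
COPY app.py /app/

# ADD with a local tar archive: the archive is extracted into /app/vendor/.
ADD vendor.tar.gz /app/vendor/

# ADD with a URL: the file is downloaded into /app/downloads/ (not extracted).
ADD https://example.com/data.txt /app/downloads/
EOF

cat "$demo_dir/Dockerfile"
```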

How to Install Docker

Create an EC2 instance running Ubuntu, then run these commands:

sudo apt update

sudo apt install docker.io -y

To check whether Docker is running, use

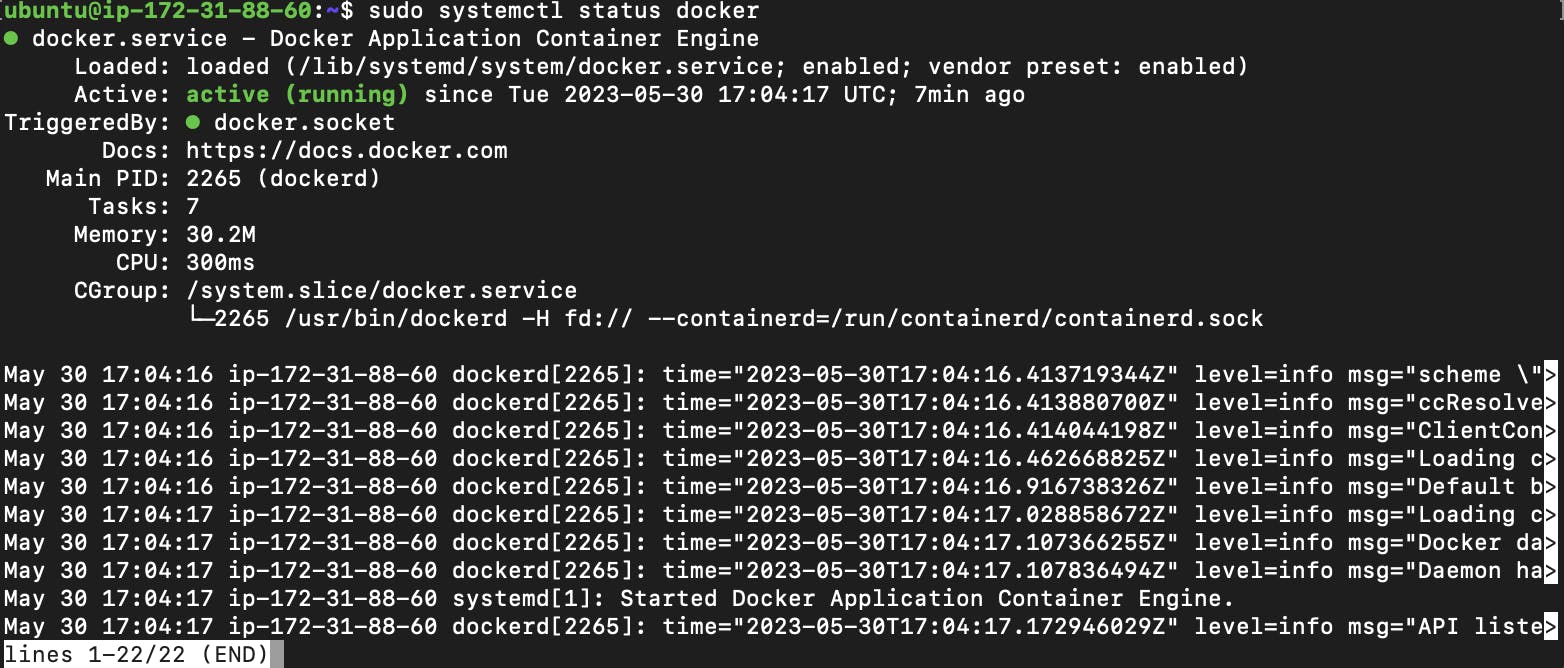

sudo systemctl status docker

To verify that Docker is ready to use, run

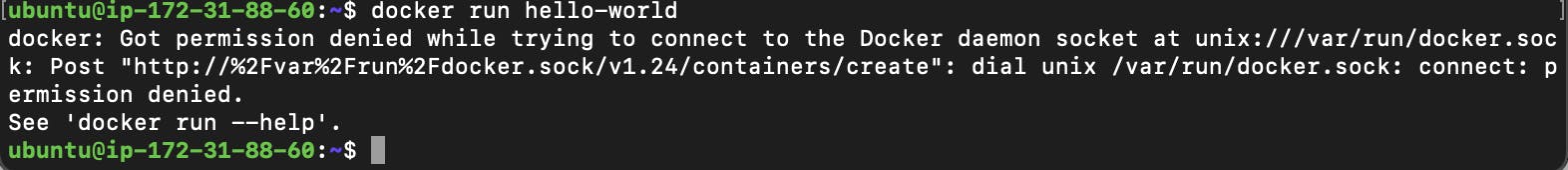

docker run hello-world

If the command fails with an error, it can mean one of two things:

Your user does not have permission to run docker commands.

The Docker daemon is not running.

The first case is common because Docker was installed with sudo, which temporarily gives your account root privileges. By default the Docker daemon runs as root, and only root or members of the docker group are allowed to talk to it.

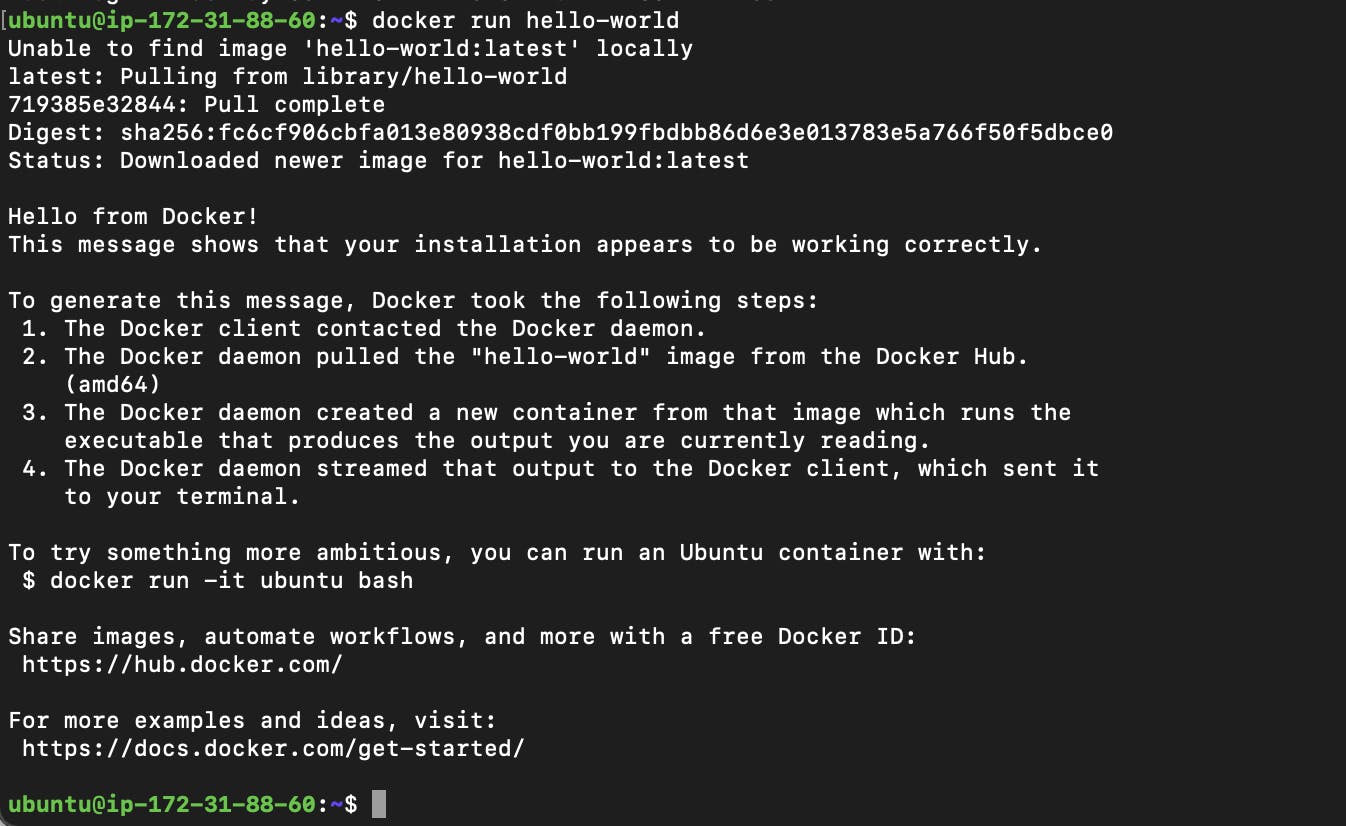

To let your user run docker commands, add the user to the docker Linux group. The docker group is created by default when Docker is installed.

sudo usermod -aG docker ubuntu

NOTE:

In the above command, ubuntu is the name of the user. Change it to your own username as appropriate. You need to log out of the EC2 instance and log back in for the change to take effect.
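To confirm that the group change is active in your current login session, you can list the session's groups. A small sketch. The variable name `in_docker_group` is only for illustration.

```shell
# id -nG (without a username) prints the group names of the current session.
if id -nG | tr ' ' '\n' | grep -qx docker; then
    in_docker_group="yes"
else
    in_docker_group="no"
fi
echo "current session in docker group: $in_docker_group"
```

If this prints "no" right after running usermod, the session predates the change, and logging out and back in should pick it up.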

Now run the command again:

docker run hello-world

This time the command should succeed and print a greeting from Docker.

Done! Docker is installed, up and running 🥳🥳

Docker Lifecycle

Before learning about the Docker lifecycle, first understand these terms:

Dockerfile - A Dockerfile is a set of instructions to build a Docker image, which can then be used to create and run containerized applications.

Docker image - It is a read-only snapshot that includes everything needed to run a piece of software. Containers are created from images.

In the Docker lifecycle, the first step is to write a Dockerfile.

Example of a Dockerfile:

# Base image
FROM ubuntu:latest

# Set working directory
WORKDIR /app

# Copy application files to the container
COPY . /app

# Install dependencies
RUN apt-get update && \
    apt-get install -y python3 && \
    apt-get install -y python3-pip && \
    pip3 install -r requirements.txt

# Expose a port
EXPOSE 8000

# Define environment variables
ENV ENV_VAR_NAME=value

# Set the entry point or default command
CMD ["python3", "app.py"]

In this example:

We start from the ubuntu:latest base image.

We set the working directory inside the container to /app.

We copy the application files from the current directory on the host machine to the /app directory inside the container.

We update the package lists and install Python 3 and pip3. We also install the dependencies specified in a requirements.txt file using pip3.

We declare that the application listens on port 8000. To make it reachable from the host, the port still has to be published with the -p option when the container is started.

We define an environment variable ENV_VAR_NAME and assign it a value.

Finally, we set the default command to run when the container starts. In this case, it runs python3 app.py, assuming there's an app.py file in the /app directory.

After writing the Dockerfile, save it in a file named Dockerfile. To build an image, use this command:

docker build -t myimage:latest .

It will create a Docker image for you.
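A minimal sketch of the build-and-run step, assuming the example Dockerfile sits next to `app.py` and `requirements.txt` in the current directory. Publishing port 8000 with `-p` is an assumption based on the `EXPOSE 8000` line in that Dockerfile.

```shell
image="myimage:latest"

if [ -f Dockerfile ] && command -v docker >/dev/null 2>&1 && docker info >/dev/null 2>&1; then
    # Build the image from the Dockerfile in the current directory.
    if docker build -t "$image" .; then
        # Start a container and map host port 8000 to container port 8000.
        docker run --rm -p 8000:8000 "$image"
    fi
else
    echo "No Dockerfile here or Docker is not reachable; nothing to build."
fi
```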

Once the Docker image is created, we can create a Docker container from it with the docker run command. The Docker container is the final output of the Docker lifecycle.

You can share this Docker image anywhere in the world through a public registry like Docker Hub. Other people can download the image and run the application without installing a single dependency, because the image already contains them. They can run the image to create a container on any platform where Docker is available.
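Sharing the image through Docker Hub can be sketched as follows. `DOCKERHUB_USER` is a hypothetical environment variable holding your Docker Hub account name, and `myimage:latest` is assumed to be an image you have already built. The script does nothing unless that variable is set.

```shell
local_image="myimage:latest"

if [ -n "${DOCKERHUB_USER:-}" ] && command -v docker >/dev/null 2>&1; then
    remote_image="$DOCKERHUB_USER/$local_image"

    # Authenticate against Docker Hub (prompts for a password or token).
    docker login -u "$DOCKERHUB_USER"

    # Give the local image a name that includes the account name.
    docker tag "$local_image" "$remote_image"

    # Upload the image to the registry.
    docker push "$remote_image"

    echo "Others can now run: docker run $remote_image"
else
    echo "DOCKERHUB_USER is not set or Docker is missing; skipping the push."
fi
```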

Doing all of this manually every time, that is, downloading the application code, installing the required dependencies, exposing the port, and finally running the application, takes a lot of time.

Docker solves this complexity. Instead of repeating many manual steps, you simply run the Docker container and everything is set up for you.